Walk This Way: A Better Way To Identify Gait Differences

Biometric person recognition methods have been explored extensively for applications such as access control, surveillance, and forensics. Biometric recognition covers any means by which a person can be uniquely identified from physical or behavioral traits, such as facial features, fingerprints, hand geometry, and gait (a person's manner of walking).

Gait is a practical trait for video-based surveillance and forensics because it can be captured on video at a distance. In fact, gait recognition has already been used in real criminal investigations. However, gait recognition is susceptible to intra-subject variations such as view angle, clothing, walking speed, shoes, and carrying status. These factors have prompted many researchers to explore new approaches that are robust to such variations.

Research harnessing deep learning to improve gait recognition has largely focused on convolutional neural networks (CNNs), which have proven successful in computer vision, pattern recognition, and biometrics. Convolution is a mathematical operation that combines two signals to produce a third; in a CNN, learned filters are convolved with an input image to produce feature maps that highlight patterns such as edges and shapes.
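To make the definition concrete, here is a minimal sketch of discrete one-dimensional convolution in plain Python. The function name `convolve_1d` and the sample signals are illustrative assumptions, not taken from the study. Each output sample is a weighted sum of one signal against a flipped, shifted copy of the other. CNN layers apply the same idea in two dimensions by sliding a learned filter over an image; many deep learning libraries actually skip the flip (cross-correlation), which makes little practical difference because the filter weights are learned anyway.

```python
def convolve_1d(f, g):
    """Full discrete convolution: (f * g)[n] = sum over k of f[k] * g[n - k]."""
    if not f or not g:
        raise ValueError("both signals must be non-empty")
    out = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            # f[i] * g[j] contributes to output index n = i + j (k = i, n - k = j).
            out[i + j] += fi * gj
    return out


# Combining a short signal with a short filter yields a third, longer signal.
signal = [1, 2, 3]
kernel = [0, 1, 0.5]
print(convolve_1d(signal, kernel))  # [0.0, 1.0, 2.5, 4.0, 1.5]
```

Convolution is commutative, so swapping the two arguments gives the same result.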

An advantage of a CNN-based approach is that network architectures can easily be adapted for better performance by changing their inputs, outputs, and loss functions. Nevertheless, a team of researchers led by Osaka University noticed that existing CNN-based cross-view gait recognition methods fail to address two important aspects.

"Current CNN-based approaches are missing the aspects on verification versus identification, and the trade-off between spatial displacement, that is, when the subject moves from one location to another," study lead author Noriko Takemura explains.

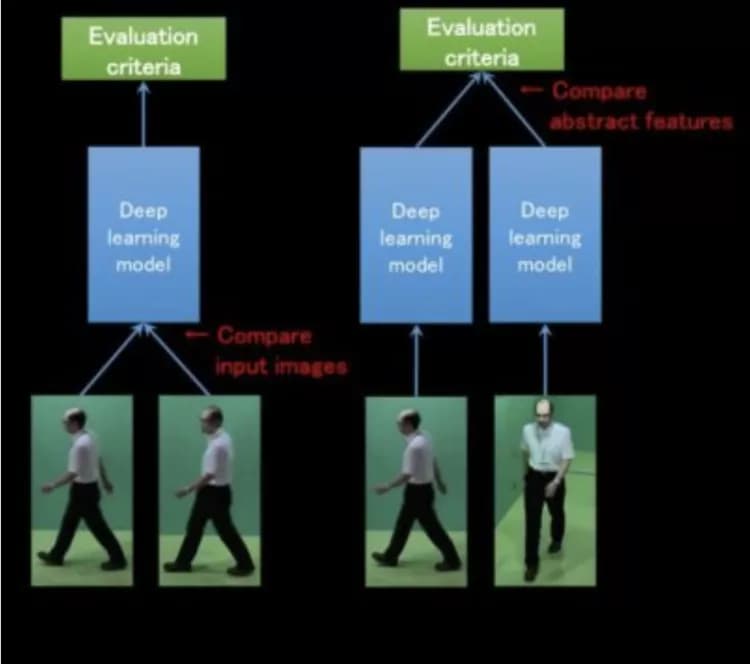
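The first aspect Takemura mentions, verification versus identification, refers to two different matching tasks. In verification, a probe is compared against one claimed identity and accepted or rejected using a threshold; in identification, the probe is compared with every enrolled subject in a gallery and assigned to the closest one. The sketch below illustrates the difference under loudly stated assumptions: the feature vectors, the Euclidean distance, the threshold value, and the function names are all hypothetical, whereas in the study the matching scores come from the CNN architectures themselves.

```python
import math


def distance(a, b):
    """Euclidean distance between two feature vectors (a hypothetical stand-in for a learned dissimilarity)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(probe, claimed_template, threshold):
    """One-to-one task: accept the claimed identity if the dissimilarity is below the threshold."""
    return distance(probe, claimed_template) < threshold


def identify(probe, gallery):
    """One-to-many task: return the gallery subject whose template is closest to the probe."""
    return min(gallery, key=lambda subject: distance(probe, gallery[subject]))


# Hypothetical gait feature vectors for two enrolled subjects.
gallery = {"subject_a": [0.9, 0.1, 0.3], "subject_b": [0.2, 0.8, 0.5]}
probe = [0.85, 0.15, 0.35]

print(verify(probe, gallery["subject_a"], threshold=0.2))  # True
print(verify(probe, gallery["subject_b"], threshold=0.2))  # False
print(identify(probe, gallery))  # subject_a
```

Verification performance is usually reported as error rates at a chosen threshold, while identification performance is usually reported as rank-1 accuracy, which is one reason an architecture suited to one task need not be best for the other.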

Considering these two aspects, the researchers designed input/output architectures for CNN-based cross-view gait recognition. For verification, they employed a Siamese network, whose input is a pair of gait features to be matched and whose output is the probability that the pair is genuine (the same subject) rather than impostor (different subjects).
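The Siamese idea can be sketched with a toy model: both inputs pass through the same embedding function with shared weights, and only the resulting feature vectors are compared, with a logistic function turning their distance into a genuine probability. The tiny linear-plus-ReLU embedding, the fixed weights, and the `scale` and `bias` values below are hypothetical stand-ins for the trained convolutional layers described in the study; nothing in this sketch is trained.

```python
import math

# Hypothetical shared weights: map a 4-dimensional input to a 2-dimensional embedding.
SHARED_WEIGHTS = [
    [0.5, -0.2, 0.1, 0.4],
    [-0.3, 0.8, 0.6, -0.1],
]


def embed(x, weights=SHARED_WEIGHTS):
    """One branch of the Siamese network: a linear layer followed by ReLU.
    Both branches call this same function, so the weights are shared."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def genuine_probability(x1, x2, scale=5.0, bias=2.0):
    """Compare the two embeddings only at the end, then map their L1 distance to (0, 1).
    A distance of zero gives sigmoid(bias); large distances push the probability toward 0."""
    e1, e2 = embed(x1), embed(x2)
    dist = sum(abs(a - b) for a, b in zip(e1, e2))
    return 1.0 / (1.0 + math.exp(-(bias - scale * dist)))


same_walker = [0.9, 0.2, 0.4, 0.7]
similar = [0.88, 0.22, 0.41, 0.69]
different = [0.1, 0.9, 0.8, 0.2]

print(round(genuine_probability(same_walker, similar), 3))
print(round(genuine_probability(same_walker, different), 3))
```

Because the two branches share one set of weights, the comparison is symmetric: swapping the inputs leaves the genuine probability unchanged.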

Notably, the Siamese network architectures are relatively insensitive to spatial displacement because the difference between a matching pair is calculated only at the last layer, after both inputs have passed through the convolution and max pooling layers. These layers reduce the dimensionality of the gait images and summarize features within local sub-regions, so small shifts in position have less effect on the final comparison. Siamese architectures can therefore be expected to perform better under large view differences. The researchers also used CNN architectures that calculate the difference between a matching pair at the input level, which makes them more sensitive to spatial displacement.
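A toy example shows why the point at which the difference is taken matters. Below, two 4×4 binary "silhouettes" differ only by a one-pixel horizontal shift. Taking the difference at the input level exposes the shift, whereas applying 2×2 max pooling first, a simplified stand-in for the convolution and pooling stages, absorbs it because each shifted pixel stays inside the same pooling window. The images and helper names are hypothetical; real networks stack many learned layers, and a shift that crosses a pooling-window boundary would still show up.

```python
def max_pool_2x2(img):
    """Non-overlapping 2x2 max pooling; assumes even height and width."""
    h, w = len(img), len(img[0])
    return [
        [max(img[i][j], img[i][j + 1], img[i + 1][j], img[i + 1][j + 1]) for j in range(0, w, 2)]
        for i in range(0, h, 2)
    ]


def l1_difference(a, b):
    """Sum of absolute element-wise differences between two equally sized 2D grids."""
    return sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


# Two hypothetical silhouettes: the same shape, shifted one pixel to the right.
frame_a = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 1, 0],
]
frame_b = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]

# Input-level comparison (difference computed before any layers): the shift is visible.
print(l1_difference(frame_a, frame_b))  # 8
# Last-layer comparison (difference computed after pooling): the shift is absorbed.
print(l1_difference(max_pool_2x2(frame_a), max_pool_2x2(frame_b)))  # 0
```

The same property cuts both ways: the pooled comparison tolerates displacement, but it also discards fine positional detail that an input-level comparison can exploit.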

"We conducted experiments for cross-view gait recognition and confirmed that the proposed architectures outperformed the state-of-the-art benchmarks in accordance with their suitable situations of verification/identification tasks and view differences," coauthor Yasushi Makihara says.

Because spatial displacement can be caused not only by view differences but also by differences in walking speed, carrying status, clothing, and other factors, the researchers plan to further evaluate their proposed method for gait recognition under spatial displacement caused by these other covariates.

Materials provided by Osaka University. Note: Content may be edited for style and length.

Disclaimer: DoveMed is not responsible for the accuracy of the adapted version of news releases posted to DoveMed by contributing universities and institutions.

References:

Noriko Takemura, Yasushi Makihara, Daigo Muramatsu, Tomio Echigo, Yasushi Yagi. (2017). On Input/Output Architectures for Convolutional Neural Network-Based Cross-View Gait Recognition. IEEE Transactions on Circuits and Systems for Video Technology. DOI: 10.1109/TCSVT.2017.2760835
